FaceAdapter

Abstract

We introduce FaceAdapter, an efficient and effective adapter designed for high-precision and high-fidelity face editing for pre-trained diffusion models. We observe that both face reenactment/swapping tasks essentially involve combinations of target structure, ID and attribute. We aim to sufficiently decouple the control of these factors to achieve both tasks in one model. Specifically, our method contains: 1) A Spatial Condition Generator that provides precise landmarks and background; 2) A Plug-and-play Identity Encoder that transfers face embeddings to the text space by a transformer decoder. 3) An Attribute Controller that integrates spatial conditions and detailed attributes.

Introduction

Task explanation

- Face reenactment: transfer the target motion back to the source identity and attributes

- face swapping: o transfer the source identity onto the target motion and attributes

Problem: Emphasis current GAN-Based frameworks drawbacks

Existing methods:

FADMandDiffSwaphave attempted to address these challenges by leveraging the powerful generative capabilities of the diffusion models.FADMrefines the results of GAN-based reenactment methods, which improves image quality but still fails to resolve the blurring issue caused by large pose variation.DiffSwapproduces blurry facial outcomes due to the lack of background information during training, which hampers model learning.InstantIDandIPAdateprhave introduced face editing adapter plugins for large pre-trained diffusion models. However, these approaches primarily focus on attribute editing using text, which inevitably weakens spatial control to ensure text editability.InstantIDuse five points to control facial poses, limiting their ability to control expressions and gaze precisely. On the other hand, direct inpainting with masks of the face area does not take into account facial shape changes, leading to a decrease in identity preservation.

Propose new method: To address the above challenges, we are committed to developing an efficient and effective face editing adapter (Face-Adapter) for pre-trained diffusion models, specifically targeting face reenactment and swapping tasks.

The design motivation of Face-Adapter is threefold:

- Fully disentangled ID, target structure, and attribute control enable a ’one-model-two-tasks’ approach;

- Addressing overlooked issues;

- Simple yet effective, plug and play.

Specifically, the proposed Face-Adapter comprises three components:

Spatial Condition Generator is designed to automatically predict 3D prior landmarks and the mask of the varying foreground area, which provides more reasonable and precise guidance for subsequent controlled generation. In addition, for face reenactment, this strategy mitigates potential problems that could occur when only extracting the background from the source image, such as inconsistencies caused by alterations in the target background due to the movement of the camera or face objects; For face swapping, the model learns to maintain background consistency, glean clues about global lighting and spatial reference, and try to generate content in harmony with the background.

Identity Encoder uses the pre-trained recognition model to extract face embeddings and then transfers them to the text space by learnable queries from the transformer decoder. This manner greatly improves the identity consistency of the generated images.

Attribute Controller includes two sub-modules: The spatial control combines the landmarks of target motion with the unchanged background obtained from the Spatial Condition Generator. The attribute template supplements the absent attribute, encompassing lighting, a portion of the background, and hair. Both two tasks can be perceived as a procedure that executes conditional inpainting, utilizing the provided identity and absent attribute content. This process adheres to the stipulations of the given spatial control, attaining congruity and harmony with the background.

Summarize contributions:

We introduce Face-Adapter, a lightweight facial editing adapter designed to facilitate precise control over identity and attributes for pre-trained diffusion models. This adapter efficiently and proficiently tackles face reenactment and swapping tasks, surpassing previous state-of-the-art GAN-based and diffusion-based methods.

We propose a novel Spatial Condition Generator module to predict the requisite generation areas, collaborating with the Identity Encoder and Attribute Controller to frame reenactment and swapping tasks as conditional inpainting with sufficient spatial guidance, identity, and essential attributes. Through reasonable and highly decoupled condition designs, we unleash the generative capabilities of pre-trained diffusion models for both tasks.

Face-Adapter serves as a training-efficient, plug-and-play, face-specific adapter for pre-trained diffusion models. By freezing all parameters in the denoising U-Net, our method effectively capitalizes on priors and prevents overfitting. Furthermore, Face-Adapter supports a "one model for two tasks" approach, enabling simple input modifications to independently accomplish superior or competitive results of two facial tasks on VoxCeleb1/2 datasets.

Related Work

Face Reenactment involves extracting motion from a human face and transferring it to another face which can be broadly divided into warping-based and 3DMM-based methods.

- Warping-based methods [14, 15, 28,29,32,48] typically extract landmarks or region pairs to estimate motion fields and perform warping on the feature maps to transfer motions. When dealing with large motion variations, these methods tend to produce blurry and distorted results due to the difficulty in predicting accurate motion fields

- 3DMM-based methods [24] use facial reconstruction coefficients or rendered images from 3DMM as motion control conditions. The facial prior provided by 3DMM enables these methods to obtain more robust generation results in large pose scenarios. Despite offering accurate structure references, it only provides coarse facial texture and lacks references for hair, teeth, and eye movement. . StyleHEAT [40] and HyperReenact [2] use StyleGAN2 to improve generation quality. However, StyleHEAT is limited by the dataset of frontal portraits, while HyperReenact suffers from resolution constraints and background blurring.

- Diffusion Models

FADMcombines the previous reenactment model with diffusion refinements but the base model limits the driving accuracy. Recently, AnimateAnyone [17] employs heavy texture representation encoders (CLIP and a copy of U-Net) to ensure the textural quality of animated results, but this manner is costly. In contrast, we aim to leverage the generative capabilities of pre-trained T2I diffusion models fully and seek to comprehensively overcome the challenges presented in previous methods, e.g., low -resolution generation, difficulty in handling large variations, efficient training, and unexpected artifacts.

Face Swapping aims to transfer the facial identity of the source image to the target image, with other attributes (i.e., lighting, hair, background, and motion) of the target image unchanged. Recent methods can be broadly classified into GAN-based and diffusion-based approaches.

- GAN Based: Despite promising improvement, these methods often produce noticeable artifacts when dealing with significant changes in face shape or occlusions.

- Diffusion Based: utilize the generative capabilities of the diffusion model to enhance sample quality. However, the numerous denoising steps during inference significantly increase the training costs when using attribute-preserving loss. DiffSwap [49] proposes midpoint estimation to address this issue, but the resulting error and the lack of background information for inpainting reference lead to unnatural results.

Face-Adapter ensure image quality only relying on:

- the denoise loss with complete disentanglement of the control of the target structure, ID and other attributes.

- reduces training costs by freezing all of U-Net’s parameters, which also preserves prior knowledge and prevents overfitting.

Textual Inversion and Dream Booth insert

identity by using optimization or fine-tuning manners.

IP-adapter(-FaceID)[39] and InstantID [31]

fine-tune only a few parameters. The latter achieves robust identity

preservation. However, as a tradeoff for text editability,

InstantID could only apply weak spatial control. Therefore,

it struggles with fine movements (expression and gaze) in face

reenactment and swapping. By comparison, our Face-Adapter is an

effective and lightweight adapter designed for pre-trained diffusion

models to accomplish face reenactment and swapping simultaneously.

Methods

Spatial Condition Generator

3D Landmark Projector:

To surmount alterations in facial shape, we utilize a 3D facial reconstruction method [8] to extract the identity, expression individually and pose coefficients of the source and target faces. Subsequently, we recombine the identity coefficients of the source with the expression and pose coefficients of the target, reconstruct a new 3D face, and project it to acquire the corresponding landmarks.

Adapting Area Predictor:

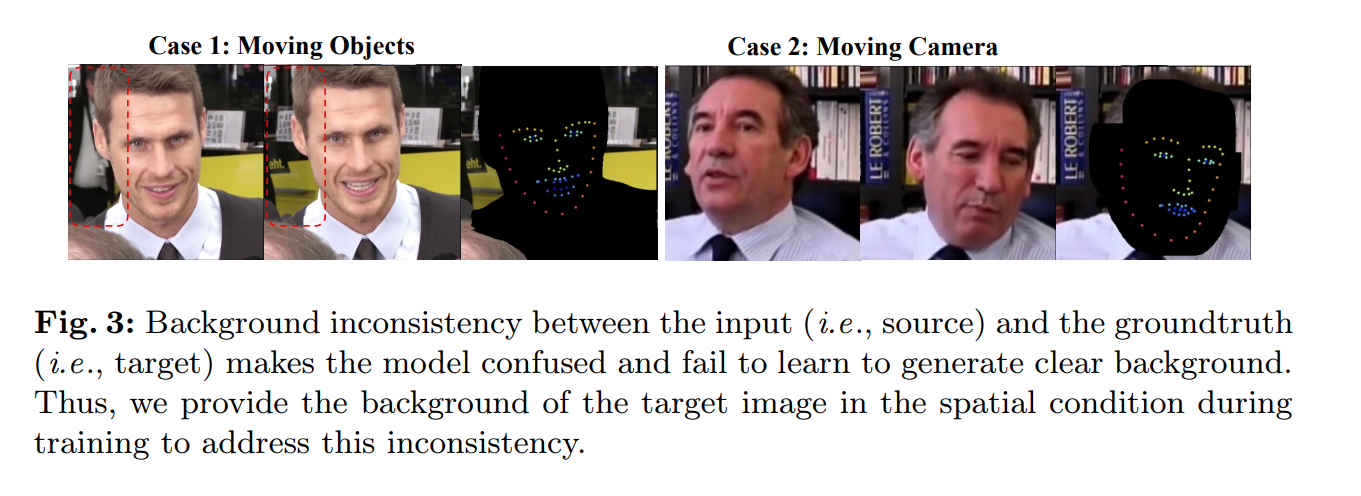

For face reenactment, prior methods assume that only the subject is in motion, while the background remains static in the training data. However, we observe that the background actually undergoes changes, encompassing the movement of both the camera and objects in the background, as illustrated in Fig. 3. If the model lacks knowledge of the background motion during training, it will learn to generate a blurry background. For face swapping, supplying the target background can also give the model clues about environmental lighting and spatial references. This added constraint of the background significantly diminishes the difficulty of the model learning, transitioning it from learning a task of generating from scratch to a task of conditional inpainting. As a result, the model becomes more attuned to preserving background consistency and generating content that seamlessly integrates with it.

In view of the above, we introduce a lightweight Adapting Area Predictor for both face reenactment and swapping, automatically predicting the region the model needs to generate (the adapting area) while maintaining the remaining area unchanged. For face reenactment, the adapting area constitutes the region occupied by the source image head before and after reenactment.

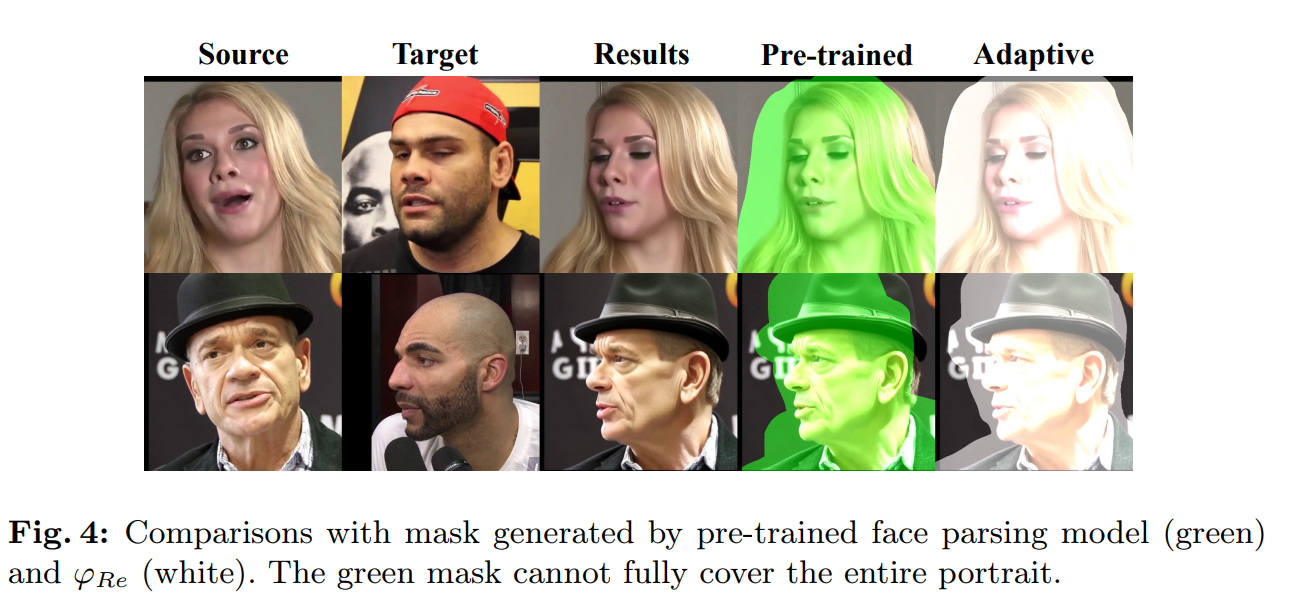

We train a mask predictor \(\varphi_{R e}\) that accepts the target image \(I^T\) and motion landmarks \(I_{l m k}\) from the 3D Landmark Projector to predict the adapting area mask \(M_{R e}^{f g}\). The mask ground truth \(M_{R e}^{g t}\) is generated by taking the union of the head regions (including hair, face, and neck) of the source and target, followed by outward dilation. Head regions are obtained using a pre-trained face parsing model [41]. It should be noted that we cannot directly utilize the pre-trained face parsing model in face reenactment. As shown in Fig. 4 row 4, when the portrait area of the source image is larger (e.g., long hair and hat) than that in the target image, the green mask created by the pre-trained parsing model cannot fully cover the entire portrait and may result in artifacts at the boundary. However, the white mask created by \(\varphi_{R e}\) in Fig. 4 row 5 can encapsulate the whole portrait, as \(\varphi_{R e}\) merely uses the source image and 3D landmarks as input, and exhibits excellent generalization when the source and target images possess different identities.

Identity Encoder

Current work: As demonstrated by IP-Adapter-FaceID [39] and InstantID [31], a high-level face embedding can ensure more robust identity preservation.

Improvement: As we observed, there is no need for heavy texture encoders [17] or additional identity networks [31] in face reenactment/swapping.

Solution: By merely tuning a lightweight mapping module to map the face embedding into the fixed textual space, identity preservation is guaranteed. ID is generated by

ArcFace. A three layer transformer decoder is employed to project the face embedding is employed to project the face embedding. (77 token in paper, not to exceed the maximum token input of SD1.5).

Attribute Controller

- Spatial Control:

In line with ControlNet [46], we create a copy of U-Net \(\phi_{C t l}\) and add spatial control \(I_{S p}\) as the conditioning input. The spatial control image \(I_{S p}^S / I_{S p}^T\) is obtained by combining the target motion landmarks \(I_{l m k}^T\) and the non-adapting area obtained by the Adapting Area Predictor \(\varphi_{R e}\left(\right.\) or \(\varphi_{S w}\) ). The I is Image. \(I_{S p}^S=I^S *\left(1-M_{R e}^{f g}\right)+I_{l m k}^T\), for face reenactment, \(I_{S p}^T=I^T *\left(1-M_{S w}^{f g}\right)+I_{l m k}^T\), for face swapping.

- Attribute Template:

Given identity and spatial control with part of the background, the attribute template is designed to supplement the missing information, including lighting and part of the background and hair. Attribute embeddings \(f_{\text {attr }} \in \mathbb{R}^{257 * d}\) are extracted from the attribute template ( \(I^S\) for reenactment and \(I^T\) for swapping) using CLIP \(E_{\text {clip }}\). To simultaneously obtain local and global features, we use both the patch tokens and the global token. The feature mapper module is also constructed as a three-layer transformer layer \(\varphi_{\text {dec }}\) with learnable queries \(q_{\text {attr }}=\left\{q_1, q_2, \cdots, q_K\right\}, K=77\).

- Strategies for Boosting Performance

- Training:

- Data: For both reenactment and face-swapping tasks, we use two images of the same person in different poses as source and target images

- Condition Dropping for Classifier-free Guidance: The conditions we need to drop include identity tokens and attribute tokens input into the U-Net and ControlNet cross-attention. We use a 5% probability to simultaneously drop identity tokens and attribute conditions to enhance the realism of the image. To fully utilize the identity tokens for generation face images and improve identity preservation, we use an additional 45% probability to drop attribute tokens.

- Inference:

- Negative Prompt for Classifier-Free Guidance: For reenactment, negative prompts of both identity and attribute tokens are empty prompt embeddings. For face-swapping, to overcome the negative impact of the target identity in attribute tokens, we use the identity tokens of the target image as the negative prompt for identity tokens.

- Training:

Experiments

With the powerful generation capability of the pre-trained SD model, we can naturally complete the regions with facial shape variations.

Furthermore, by using the identity tokens of the target image as a negative prompt during face-swapping inference, we further enhance the identity similarity with the source face.

Datasets:

- Train: VoxCeleb1 and VoxCeleb2 [6] dataset. We train our face-adapter for 70,000 steps on 8×V100 NVIDIA GPUs with a constant learning rate of 1e-4 and a batch size of 32.

- Eval: leverage the 491 test videos from the VoxCeleb1 and randomly sample 1,000 images.

Results:

As a result, compared to other GAN based methods and diffusion-based methods trained from scratch like FADM, our method is capable of generating faithful attribute details, i.e., hair texture, hat, and accessories, that are consistent with the source image.

Limitations

unable to achieve temporal stability in video face reenactment/ swapping, which requires incorporating additional temporal fine-tuning in the future.